GPU parallel computing for machine learning in Python 2025

$100.00

SAVE 50% OFF

$50.00

$0 today, followed by 3 monthly payments of $16.67, interest free.

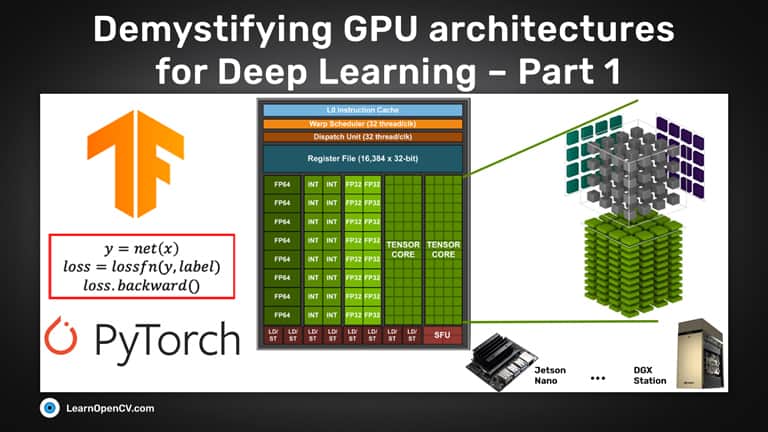

Demystifying GPU Architectures For Deep Learning Part 1

What is the need of Parallel Processing for Machine Learning in

Parallel Computing Graphics Processing Unit GPU and New

What are the benefits of using CUDA for machine learning Quora

Python Performance and GPUs. A status update for using GPU by

GPU Accelerated Data Science with RAPIDS NVIDIA

Description

Product Item: GPU parallel computing for machine learning in Python 2025

GPU parallel computing for machine learning in Python how to 2025, GPU parallel computing for machine learning in Python how to build a parallel computer 2025, Parallel Computing Upgrade Your Data Science with GPU Computing 2025, GitHub pradeepsinngh Parallel Deep Learning in Python 2025, Understanding Data Parallelism in Machine Learning Telesens 2025, Information Free Full Text Machine Learning in Python Main 2025, Parallel Processing of Machine Learning Algorithms by dunnhumby 2025, Deep Learning Frameworks for Parallel and Distributed 2025, A Complete Introduction to GPU Programming With Practical Examples 2025, Parallelizing across multiple CPU GPUs to speed up deep learning 2025, Demystifying GPU Architectures For Deep Learning Part 1 2025, What is the need of Parallel Processing for Machine Learning in 2025, Parallel Computing Graphics Processing Unit GPU and New 2025, What are the benefits of using CUDA for machine learning Quora 2025, Python Performance and GPUs. A status update for using GPU by 2025, GPU Accelerated Data Science with RAPIDS NVIDIA 2025, multithreading Parallel processing on GPU MXNet and CPU using 2025, Beyond CUDA GPU Accelerated Python for Machine Learning on Cross 2025, Multi GPU An In Depth Look 2025, MPI Message Passing Interface with Python Parallel computing 2025, What is CUDA Parallel programming for GPUs InfoWorld 2025, Best GPUs for Machine Learning for Your Next Project 2025, GPU Computing Princeton Research Computing 2025, Computing GPU memory bandwidth with Deep Learning Benchmarks 2025, Massively parallel programming with GPUs Computational 2025, Here's how you can accelerate your Data Science on GPU KDnuggets 2025, How CUDA is Like Turbo for Your Machine Learning Projects CUDA 2025, The Definitive Guide to Deep Learning with GPUs cnvrg.io 2025.

GPU parallel computing for machine learning in Python 2025

- gpu parallel computing for machine learning in python

- logistic regression is supervised learning

- machine learning online learning algorithms

- machine learning python medium

- neural network python prediction

- python deep learning face recognition

- restricted boltzmann machine deep learning

- python machine learning jupyter notebook

- standard machine learning algorithms

- the best programming language for machine learning